In the current age of technological progress, we are creating Artificial Intelligence (AI) and robots that can emulate humans. We employ them as hospital receptionists to help patients and as teachers in reception classes, and we use them to look after our homes, the elderly and other aspects of our lives.

But what about robots utilised in warfare? And who is legally responsible for these dangerous AIs?


Where Have the “Killer Robots” Come From?

An interesting bit of trivia before we proceed: ‘robot’ is a term first coined in a Czech play to describe metal slaves, which, if you think about it, is rather apt. Machines today have achieved for us what the Romans accomplished with human slaves.

This extends to the waging of war. War has long been acknowledged as a costly waste of human life, and equally as a necessity. Now there are discussions suggesting that we should remove humans from the process so that warfare is no longer morally expensive, and the military is spending billions to achieve this.

What Form Do the AIs Take?

The military has long been a strong investor in research and development. Two products of this investment are the drone and the ‘MIT Cheetah robot’, or ‘robot dog’.

Think of these machines as precursors to Terminator-style machines: able to operate independently of human input, overcome obstacles and keep running for anywhere from hours to weeks, while possessing incredible strength, mobility and power to harm.

The Cheetah is more advanced than the standard drone because it can be sent to pursue objectives, much like Amazon’s driverless delivery machines, but on a whole new level and designed for war. It has yet to be deployed in combat.

By contrast, drones have seen combat. In fact, back in 2016 the CIA was using drones to deliberately target rescuers and funeral-goers in Pakistan, and similar tactics are still in use elsewhere today.

At the time, the UN Special Rapporteur on extrajudicial killings described the practice as a ‘war crime’, a view that has been endorsed by UNHRC representatives. The practice was curtailed back then, but Trump has since stated that he will do anything to kill ‘terrorists’, and in attacks of this kind innocent people are often hurt.

Are “Killer Robots” Really That Dangerous?

Since last year, scientists at UC Berkeley, along with NGOs such as the Future of Life Institute, have been calling for urgent legislation against “killer robots” to prevent any government or terrorist cell from exploiting the technology.

The counter-argument is that such robots would actually reduce war crimes, since they pursue a set objective and do not run wild, raping and pillaging as history shows armies are wont to do.

So Who Would Be Responsible for an AI’s War Crimes?

Let’s play devil’s advocate for a moment. If a robot, and a fully autonomous one at that, does commit a war crime despite being calm and calculated, who would be held responsible?

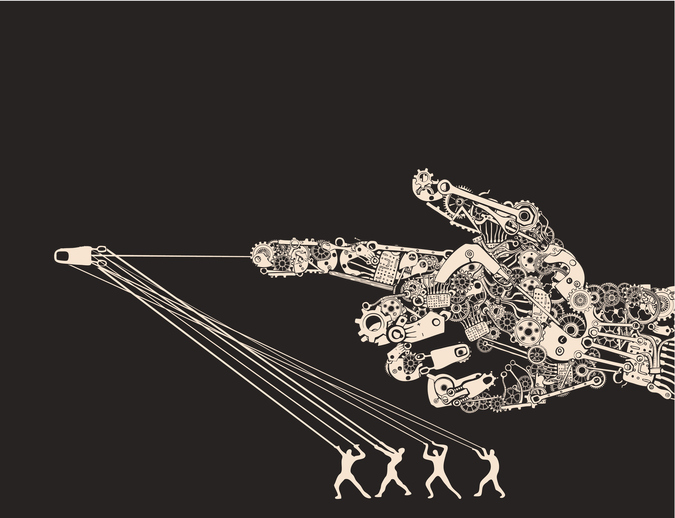

According to Noel Sharkey, Professor at the University of Sheffield and co-founder of the International Committee for Robot Arms Control, the answer is “no one”: not “computer programmers”, nor “manufacturers”, nor “military personnel”. Current loopholes in the law, Sharkey states, make it all too easy for commanders to “hide” by “blaming the software or the manufacturing process”.

Bonnie Docherty, in a report from Harvard Law School’s International Human Rights Clinic, echoed Sharkey, stating that there is simply no avenue for criminal redress; in effect, the ICC would have no jurisdiction over war crimes committed by fully autonomous weapons.

Sharkey does admit it may still be possible to sue manufacturers for compensation, but that is not quite the same as holding someone to account, which is rather concerning.

According to reporting by The Guardian, a lab at a South Korean university is currently working on the production of death machines. With this new development dubbed by The Times ‘the New Arms Race’, the UN has decided to reopen talks on defining killer robots so that it can move towards legislating on them.

Understandably, the International Criminal Court has not yet commented on this area of the law.

From an academic perspective, a 2016 article in the Journal of International Affairs titled ‘Holding Killer Robots Accountable’ explains in detail that it would be hard to hold anyone accountable unless there were proof that the manufacturers knew of some programming defect. This is because a court cannot pinpoint the emotions or motivations of an AI with “no human at the helm”.

With fully autonomous machines this becomes impossible, leaving a huge gap in international law on responsibility for autonomous machines. The author suggests that ethical guidelines could be created to address this.

For the time being, this area of the law is still developing, so those interested in international criminal law should keep an eye on it.


Published: 04/05/18 Author: Cameron Haden